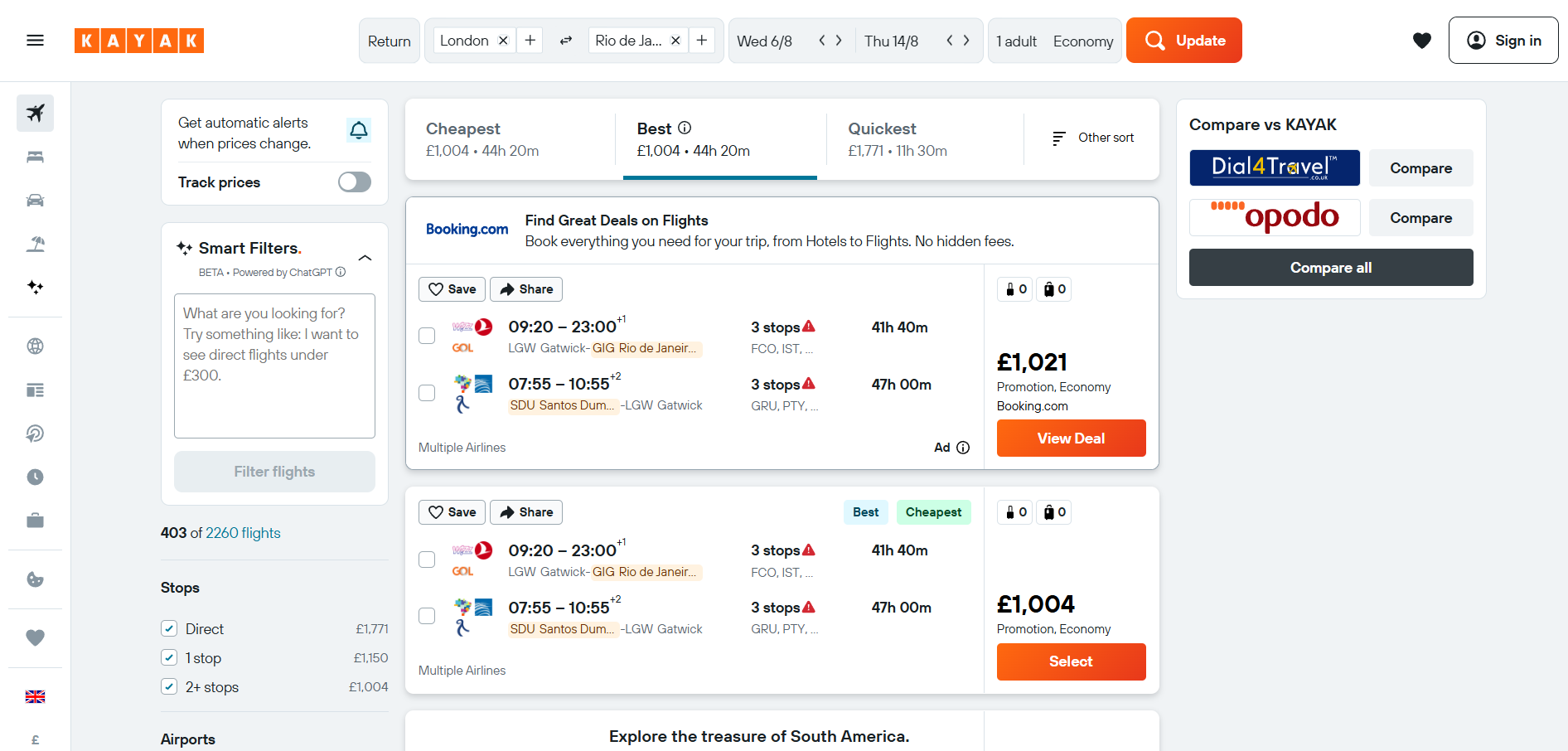

Over a decade ago, I worked on a travel meta-search website. We discovered through UX research that only the most savvy users could effectively utilize the myriad filter options available for flight results. Acknowledging this, we dedicated significant time to optimizing the user experience in that area, but never found a solution that really made filters significantly easier to use.

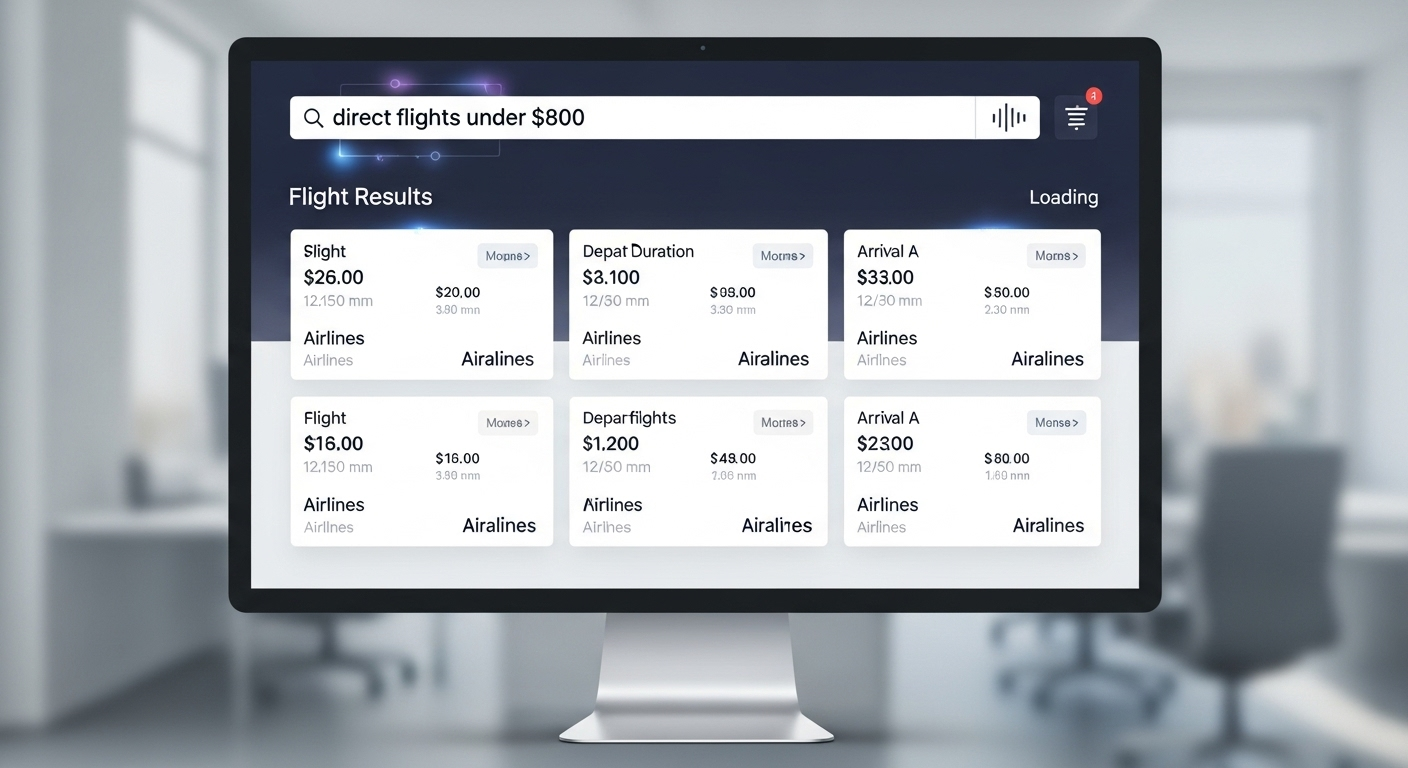

Now, imagine if instead of having to figure out how the filters work and select the ones that reflect what they are looking for, users could simply express what they want using their own language, maybe even using their voice as the input.

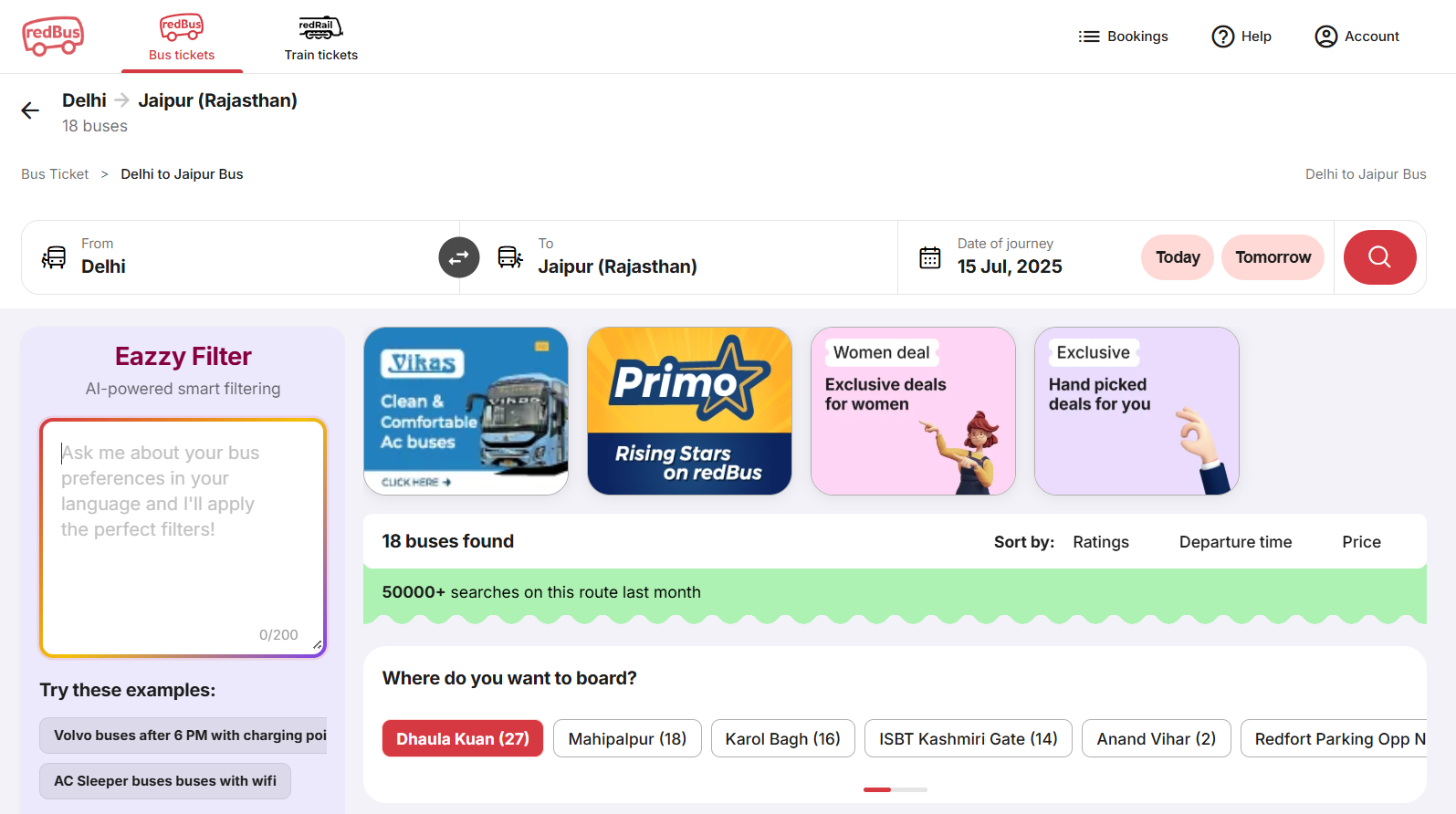

It turns out that, with AI's significant development in the last few years, this is possible today, and there are already sites out there implementing this pattern, like Kayak's Smart Filter feature, or Redbus's Eazzy filter.

And even better, with the help of the Built-in AI APIs, the entire process can run on the user's device at zero cost, without the user's voice or text prompt ever leaving the device, and it even works offline! Here's a demo application implementing a smart filtering experience that runs on the client side, with voice input:

You can also try out the live demo.

To try out the demo above, you will need to enable the Prompt API for Gemini Nano by pointing your browser to `chrome://flags/#prompt-api-for-gemini-nano` and setting the flag to `Enabled`. To enable the audio input, you will also need to set the `chrome://flags/#prompt-api-for-gemini-nano-multimodal-input` flag to `Enabled`.

But how does this work!?

The core functionality of transforming the user's natural language input into filter settings uses generative AI in the form of a Large Language Model (LLM), with a feature called structured output, which helps the model generate output in a specific format. The audio input feature also uses a multimodal LLM to transcribe the user's voice into text, which is then passed to the core functionality for processing. Let's take a deeper look into how those work.

Transforming natural language input into structured filter configuration

As described in the previous section, the solution uses an LLM to build the core functionality, which transforms the user's natural language query into a structured filter configuration that the application can use.

More specifically, this implementation uses the Built-in Prompt API, which runs on top of a browser-provided, state-of-the-art LLM, Gemini Nano in Chrome's case. Using an LLM via the Prompt API has the advantage that the model is managed by the browser. Once the model has been downloaded for the first time, it's available to any site, so it may already be present on the user's machine, avoiding hefty downloads.

While the Built-in Prompt API is generally available in Chrome Extensions, on the web it's currently only available through a Chrome Origin Trial on macOS, Windows and Linux, and Chrome is currently the only browser that provides the API. This means that features that lean on this API should either be a Progressive Enhancement or use a hybrid solution, like Firebase AI Logic.
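One way to treat the feature as a progressive enhancement is to decide up front which filter UI to render. The following is a minimal sketch, assuming the availability states that `LanguageModel.availability()` reports in Chrome (`unavailable`, `downloadable`, `downloading` and `available`). The `chooseFilterMode` helper and the `FilterMode` type are hypothetical names used only for illustration and are not part of the demo.

```typescript
// Availability states as reported by LanguageModel.availability() in Chrome.
type Availability = "unavailable" | "downloadable" | "downloading" | "available";

// Hypothetical UI modes for this sketch.
type FilterMode = "smart" | "manual";

// Decide which filter UI to render. `availability` is undefined when the
// LanguageModel global does not exist in the current browser.
function chooseFilterMode(availability: Availability | undefined): FilterMode {
  // No API, or the device cannot run the model: fall back to the classic
  // filters (or to a server-side model in a hybrid setup).
  if (availability === undefined || availability === "unavailable") {
    return "manual";
  }
  // In the remaining states a session can be created, although the first
  // request may have to wait for the model download to finish.
  return "smart";
}

// In the browser, the input could be obtained like this:
//   const availability = "LanguageModel" in globalThis
//     ? await LanguageModel.availability()
//     : undefined;
```

With this in place, the natural language input would only be rendered when `chooseFilterMode()` returns `"smart"`, and the regular filter controls remain available in every case.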

The process of transforming the user's input into a filter configuration can be broken into three components:

- A structured output schema which describes the format the model should use to output information, as well as constraints to the output and field descriptions.

- A system prompt describing what the model's goal is, a set of rules and examples to help the model understand how to process the information and, finally, any additional information it may need.

- Handling the user's query, that is, taking the user input and prompting the large language model to extract the information and return it in the required format.

Defining the structured output

The structured output is the glue between the output of the LLM and the filters in the application. The application includes a method that applies a set of filters when it receives an object with the filter definitions:

```typescript
interface FilterState {
  minPrice: number;
  maxPrice: number;
  departureAirports: string[];
  arrivalAirports: string[];
  stops: number[];
  airlines: string[];
}

const handleSmartFilterChange = (newFilters: FilterState) => {
  // Filter results providing the state and update the UI.
}
```

A JSON Schema is used to describe the target output for the LLM. In this example, it should describe the FilterState object above, which is the input to handleSmartFilterChange(). This example from the Chrome documentation for using Structured Output with the Prompt API explains the steps needed:

```javascript
const session = await LanguageModel.create();

const schema = {
  "type": "boolean"
};

const post = "Mugs and ramen bowls, both a bit smaller than intended- but that's how it goes with reclaim. Glaze crawled the first time around, but pretty happy with it after refiring.";

const result = await session.prompt(
  `Is this post about pottery?\n\n${post}`,
  {
    responseConstraint: schema,
  }
);
console.log(JSON.parse(result));
```

The `responseConstraint` is defined with a JSON Schema, which is quite a powerful format. Make sure to check the documentation for it at json-schema.org.

The schema for the filter object is much larger than this example, and you can read the whole definition on the project's repository. The following are some tips and best practices identified while writing the schema for this demo application:

- Required fields: in the initial version, fields were omitted when the user's query contained no information about them, so there were no required fields. Experimentation showed that the results from the LLM were most consistent when those fields were required, with default values provided for when the information wasn't in the user's query. For numeric fields, `-1` was used as the default value, and the code for handling filters was adapted to handle that.

- Field descriptions: field descriptions help the LLM understand the context of each field and add the correct information to them. It's generally better to provide this information in the schema itself, rather than in the prompt.

- Regex fields: airline fields are 2-character strings and airport fields are 3-letter strings. Without a pattern in the schema, the LLM would occasionally return the full airline or airport names, rather than the 2- or 3-character codes.
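To make the tips above concrete, here is a reduced schema sketch. It is not the demo's schema, which lives in the project's repository: the selection of fields, the description texts and the exact regular expressions are assumptions made for illustration.

```typescript
// A reduced, hypothetical schema that applies the three tips above.
const filterSchema = {
  type: "object",
  properties: {
    maxPrice: {
      type: "number",
      // Field description: tells the model what the field means and
      // which default to use when the query has no price information.
      description: "Maximum ticket price, or -1 when the query does not mention one.",
    },
    airlines: {
      type: "array",
      description: "Codes of the airlines the user wants to fly with. Empty when none are mentioned.",
      // Regex field: two characters, since codes such as B6 contain digits.
      items: { type: "string", pattern: "^[A-Z0-9]{2}$" },
    },
    departureAirports: {
      type: "array",
      description: "Codes of the airports the user wants to depart from. Empty when none are mentioned.",
      // Regex field: three uppercase letters.
      items: { type: "string", pattern: "^[A-Z]{3}$" },
    },
  },
  // Required fields: every field is always present, falling back to defaults.
  required: ["maxPrice", "airlines", "departureAirports"],
  additionalProperties: false,
} as const;
```

An object like this would be passed as the `responseConstraint` option of `prompt()`, in the same way as the boolean schema in the pottery example.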

Engineering the system prompt

If the structured output defines what the model output should look like, the system prompt defines how the model should interpret the user's query. This is the step where most of the optimization time was spent, to maximize the number of queries the model interprets correctly.

In the Prompt API, the system prompt can be passed to the model when creating a new instance of LanguageModel:

```javascript
const systemPrompt = '...';

const session = await LanguageModel.create({
  initialPrompts: [{
    role: "system",
    content: systemPrompt,
  }]
});
```

The system prompt can also be passed as a parameter when calling `prompt()` or `promptStreaming()`. However, setting it on the session is simpler and performs better when prompting repeatedly with the same system prompt.

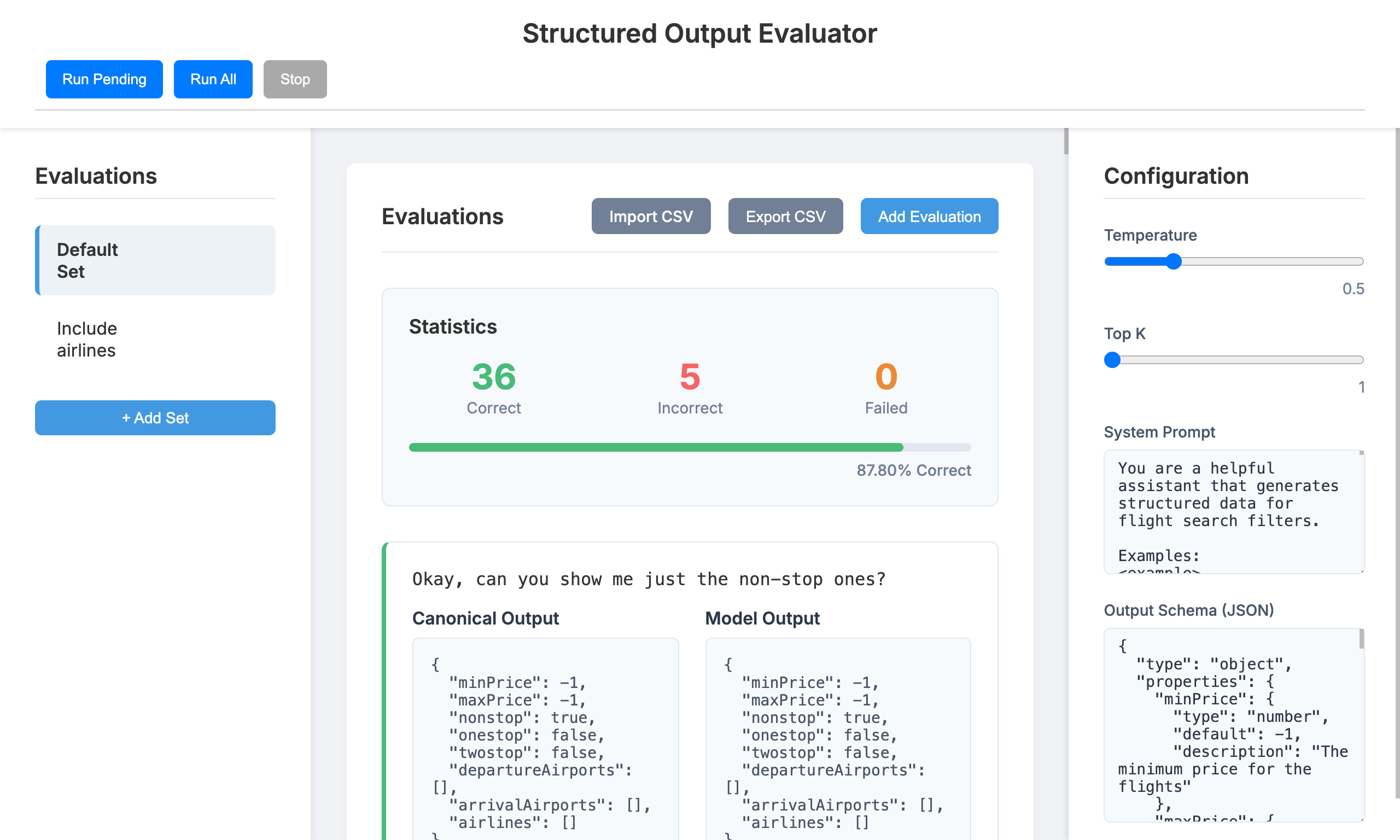

Given prompt engineering is an area where a lot of the time building the application is spent, it's worth using a tool to track progress and regression across different changes and iterations. For this application, a tool to test user queries against prompts and model configuration was created, specifically for the Prompt API and structured output. You can see the output of the the in the screenshot below:
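The core of such a tool can be quite small. The sketch below is not the tool described above. It only illustrates one way to score a prompt iteration, assuming each test case already holds the expected filter object and the parsed model output. The `EvalCase`, `canonical` and `accuracy` names are hypothetical.

```typescript
interface EvalCase {
  query: string;
  expected: unknown; // Expected filter object for the query.
  actual: unknown;   // Parsed model output for the same query.
}

// Serialize a JSON value with sorted object keys, so that key order
// does not affect the comparison.
function canonical(value: unknown): string {
  if (Array.isArray(value)) {
    return "[" + value.map(canonical).join(",") + "]";
  }
  if (value !== null && typeof value === "object") {
    const record = value as Record<string, unknown>;
    const parts = Object.keys(record)
      .sort()
      .map((key) => JSON.stringify(key) + ":" + canonical(record[key]));
    return "{" + parts.join(",") + "}";
  }
  return JSON.stringify(value);
}

// Fraction of cases where the model output matches the expectation exactly.
function accuracy(cases: EvalCase[]): number {
  if (cases.length === 0) return 0;
  const passed = cases.filter(
    (c) => canonical(c.expected) === canonical(c.actual)
  ).length;
  return passed / cases.length;
}
```

Running the same set of queries after each prompt or schema change and comparing the resulting score makes regressions visible early.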

The following notes and recommendations were derived from the prompt engineering work on this application:

- The initial implementation focused on providing a set of rules for the model to follow in the system prompt. But when adding example inputs and outputs (multi-shot prompting), the accuracy of the output increased significantly, going from `~56%` to `~90%`. At that point, it was possible to completely remove the rules and focus on examples, without a penalty in the model's accuracy, resulting in a short and simple base prompt:

You are a helpful assistant that generates structured data for flight search filters.

While the base prompt is short, the system prompt includes a total of 12 examples that help the LLM understand different user queries and expected results. Note that the example outputs include all the required fields, which helps the LLM understand when the default values should be used.

```
<example>
<query>
Flights under $800
</query>
<output>
{
  "minPrice": -1,
  "maxPrice": 800,
  "nonstop": false,
  "onestop": false,
  "twostop": false,
  "departureAirports": [],
  "arrivalAirports": [],
  "airlines": []
}
</output>
</example>
```

- The model would occasionally return the wrong code for airlines. Including a list of available airlines and codes in the system prompt made it much more accurate at returning airline codes. The following is how the list of airlines was included in the system prompt.

```
This is a list of airlines and codes available to filter:
[
  { code: "UA", name: "United Airlines" },
  { code: "DL", name: "Delta Air Lines" },
  { code: "AA", name: "American Airlines" },
  { code: "WN", name: "Southwest Airlines" },
  { code: "B6", name: "JetBlue Airways" },
  { code: "NK", name: "Spirit Airlines" },
  { code: "AS", name: "Alaska Airlines" },
  { code: "F9", name: "Frontier Airlines" },
  { code: "QF", name: "Qantas Airways" },
]
```

While the same solution could be used for airport codes and names, the model has been accurate in extracting airport codes from user queries, so the same approach wasn't necessary.
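Keeping the base prompt, the examples and the airline list as separate pieces of data makes it easier to iterate on each of them. The following sketch shows one possible way to assemble them into a single system prompt. The `buildSystemPrompt` helper, its types and the ordering of the sections are assumptions for illustration, not the demo's actual code.

```typescript
// Hypothetical shapes for the pieces that make up the system prompt.
interface PromptExample {
  query: string;
  output: Record<string, unknown>;
}

interface Airline {
  code: string;
  name: string;
}

// Join the base prompt, the multi-shot examples and the airline list
// into one string, separated by blank lines.
function buildSystemPrompt(
  basePrompt: string,
  examples: PromptExample[],
  airlines: Airline[]
): string {
  const exampleBlocks = examples.map((example) =>
    [
      "<example>",
      `<query>${example.query}</query>`,
      `<output>${JSON.stringify(example.output)}</output>`,
      "</example>",
    ].join("\n")
  );

  const airlineList =
    "This is a list of airlines and codes available to filter:\n" +
    JSON.stringify(airlines, null, 2);

  return [basePrompt, ...exampleBlocks, airlineList].join("\n\n");
}
```

The resulting string would then be used as the `content` of the `system` entry in `initialPrompts`.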

Transform user queries into filter configuration

With all the pieces in place, it now becomes possible to add the code that glues the system prompt, schema and the user query to transform the user's input into a filter configuration. It's important to note that the configuration includes a value of 0.5 for the temperature and 1 for the top-K, which significantly reduces the randomness of the model and causes it to return more consistent results.

Read Understand the Effects of Temperature on Large Language Model Output for considerations on the impact of changing temperature and top-K values on an LLM output.

```javascript
const systemPrompt = "..."; // content elided for brevity.
const schema = {...}; // content elided for brevity.

// Create the model session.
const session = await LanguageModel.create({
  temperature: 0.5,
  topK: 1,
  initialPrompts: [{
    role: "system",
    content: systemPrompt,
  }]
});

// Execute the user's query on the session, passing the structured output schema as a parameter.
const result = await session.prompt(query, {
  responseConstraint: schema,
});

const filterState = JSON.parse(result);
```
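To build some intuition for why these settings make the output consistent, the toy sampler below applies temperature and top-K to a list of raw token scores. It is a simplified illustration of the general technique, not how Gemini Nano is implemented, and `sampleTopK` is a hypothetical name. Note that with a top-K of 1 only the highest-scoring token survives, so the choice no longer depends on the temperature or on randomness.

```typescript
// Pick a token index from raw scores (logits) using temperature and top-K.
// `random` is injected so the behavior can be checked deterministically.
function sampleTopK(
  logits: number[],
  temperature: number,
  topK: number,
  random: () => number = Math.random
): number {
  if (logits.length === 0 || topK < 1 || temperature <= 0) {
    throw new Error("Expected non-empty logits, topK >= 1 and temperature > 0");
  }

  // Keep only the K highest-scoring candidates.
  const candidates = logits
    .map((logit, index) => ({ logit, index }))
    .sort((a, b) => b.logit - a.logit)
    .slice(0, topK);

  // Softmax weights over the survivors. A lower temperature sharpens
  // the distribution towards the best candidate.
  const maxLogit = candidates[0].logit;
  const weights = candidates.map((c) =>
    Math.exp((c.logit - maxLogit) / temperature)
  );
  const total = weights.reduce((sum, w) => sum + w, 0);

  // Draw one candidate proportionally to its weight.
  let threshold = random() * total;
  for (let i = 0; i < candidates.length; i++) {
    threshold -= weights[i];
    if (threshold <= 0) return candidates[i].index;
  }
  return candidates[candidates.length - 1].index;
}
```

Calling `sampleTopK([1.0, 3.5, 2.0], 0.5, 1)` always selects index 1, the highest score, while larger top-K values let lower-scoring tokens through from time to time.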

With the AI-generated filter configuration ready, all that is left is to invoke the code that handles filtering:

handleSmartFilterChange(filterState)
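The body of the filtering code isn't shown in this article. As a rough sketch of how the defaults could be handled, the function below treats `-1` and empty lists as "no constraint". The `Flight` shape and the `applyFilters` helper are hypothetical, and reading an empty list as "no constraint" is an assumption based on the default values in the example output.

```typescript
interface FilterState {
  minPrice: number;
  maxPrice: number;
  departureAirports: string[];
  arrivalAirports: string[];
  stops: number[];
  airlines: string[];
}

// Hypothetical shape of a flight result in the application.
interface Flight {
  price: number;
  departureAirport: string;
  arrivalAirport: string;
  stops: number;
  airline: string;
}

// An empty list means the query did not constrain that field.
function allows<T>(allowed: T[], value: T): boolean {
  return allowed.length === 0 || allowed.indexOf(value) !== -1;
}

// Keep only the flights that satisfy every active filter.
function applyFilters(flights: Flight[], filters: FilterState): Flight[] {
  return flights.filter(
    (flight) =>
      // -1 is the default for numeric fields and disables the check.
      (filters.minPrice === -1 || flight.price >= filters.minPrice) &&
      (filters.maxPrice === -1 || flight.price <= filters.maxPrice) &&
      allows(filters.departureAirports, flight.departureAirport) &&
      allows(filters.arrivalAirports, flight.arrivalAirport) &&
      allows(filters.stops, flight.stops) &&
      allows(filters.airlines, flight.airline)
  );
}
```

Because the schema marks every field as required, the filtering code can rely on each property being present and only needs to recognize the default values.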

Handling voice input with multimodal

Multimodal models are capable of handling image, audio and sometimes video in their input or output. One potential use case for those models is to transcribe the user's voice input into text that can then be plugged into other parts of the application.

The implementation with the Prompt API is similar to before, with the key differences being that the API is told to expect audio inputs, so it can ensure the right models that support this modality are created, and that the audio blob is handed over to the model as part of the prompt call.

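```javascript
async function getTranscriptionFromAudio(audioBlob) {
  // Request a session that accepts audio input.
  const session = await LanguageModel.create({
    expectedInputs: [{ type: 'audio' }],
  });

  // Send the instruction and the audio blob in the same user message.
  const result = await session.prompt([{
    role: 'user',
    content: [
      { type: 'text', value: 'Transcribe this audio' },
      { type: 'audio', value: audioBlob },
    ]
  }]);

  // Return the transcription so the caller can use it as the query.
  return result;
}
```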

The multimodal functionality for the Prompt API is behind a different flag. To try out the filter, make sure to enable the flags mentioned previously in this article and additionally set `chrome://flags/#prompt-api-for-gemini-nano-multimodal-input` to `Enabled`.

Finally, recording the user's voice input can be implemented via the MediaRecorder:

```javascript
const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
const mediaRecorder = new MediaRecorder(stream);
mediaRecorderRef.current = mediaRecorder;
audioChunksRef.current = [];

mediaRecorder.ondataavailable = (event) => {
  audioChunksRef.current.push(event.data);
};

mediaRecorder.onstop = async () => {
  const audioBlob = new Blob(audioChunksRef.current, { type: "audio/webm" });
  try {
    const transcription = await getTranscriptionFromAudio(audioBlob);
    setQuery(transcription);
    await handleFilter(transcription);
  } catch (error) {
    console.error("Error getting transcription:", error);
  }
};

mediaRecorder.start();
```

Conclusion

This article demonstrated how a developer can use generative AI to build a better filtering experience, using the Built-in Prompt API.

Result filters have been an advanced user feature for a long time, not only in the travel vertical, where smart filtering seems to be getting more traction, but also across e-commerce sites, auction sites, and any other user interface where users need to apply filters to get to the results they want.

Generative AI can streamline this user journey, allowing users to explain the results they want in their own language instead of having to figure out how to use the controls provided by the site, so they reach their goals faster when visiting your site.
